Showing A Computer How To Sketch

A sketch is a compilation of shape and motion from the perspective of the artist creating it. Typically a sketch is made up of lines representing the edges of objects and the boundaries between shapes. It captures most of the details that matter for perceiving the concept itself.

How, then, can a computer generate a sketch that mimics one created by a human?

The short answer is: it can't. A computer has no awareness of human perception, nor does it have any sort of conceptual vision to apply to that perception. A computer does, however, have tremendous analytical power. It can simulate the concepts a human uses to create a sketch by analyzing the edges and directions present in an image, in much the same way our own brains do, at the most basic level.

Our visual system is highly complex, made up of billions of neurons with trillions of connections. Each of these neurons is wired up and 'trained' to respond to a certain stimulus, and only that stimulus. Many neurons in the lower levels of the visual cortex are wired to respond only to edges of a certain orientation, in a certain location. These neurons literally perform edge detection and, furthermore, signal which direction that edge is oriented. This is what gives us our primary perception of shapes and boundaries.

Naturally, this seems like a logical place to start training a computer to see like a human, and in fact, it is the only place I'm focusing on for the duration of this article.

To simulate the human ability to detect edges and their directions, I will be using techniques from Digital Signal Processing, or DSP. In DSP, a specific set of values, called the coefficients, is applied to every part of an image. Certain features of the image, in certain places, will have a 'reaction' with these values. This process is known as convolution, and it is widely used in computer graphics for all sorts of image processing. Some of the more common uses of convolution include blurring, sharpening, and embossing.
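Concretely, the 'reaction' is a weighted sum: each output pixel is the sum of the input pixels around it, each multiplied by the matching coefficient. Here is a minimal pure-Python sketch of 2D convolution on a grayscale image stored as a list of rows. The function name `convolve2d` and the clamp-to-edge border handling are my own assumptions, not the original program's code.

```python
def convolve2d(image, kernel):
    """Convolve a grayscale image (list of rows) with a 2D kernel.

    Pixels outside the image are clamped to the nearest edge pixel
    (an assumption; zero-padding is another common choice).
    """
    h, w = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    cy, cx = kh // 2, kw // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0.0
            for j in range(kh):
                for i in range(kw):
                    # Convolution flips the kernel: coefficient (j, i) pairs
                    # with the pixel offset (cy - j, cx - i) from (y, x).
                    sy = min(max(y + cy - j, 0), h - 1)
                    sx = min(max(x + cx - i, 0), w - 1)
                    total += kernel[j][i] * image[sy][sx]
            out[y][x] = total
    return out
```

A kernel with a single 1 at its center returns the image unchanged, which makes a handy sanity check before trying real kernels.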

When convolving an image, the coefficients are usually arranged as a matrix (often called a kernel), since the values are laid out in 2D. The values in the matrix are the most important factor in determining what the convolution will produce. In a sharpening matrix, the center value is usually high, while the surrounding values are slightly below 0 (-1 or -2). This causes the differences between neighboring pixels to be amplified. In a blurring matrix, all the values are small and positive, peaking at the center and tapering off toward zero. The size of the matrix and the rate at which the values fall off from the center define the width and 'shape' of the blur.
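Both kinds of matrix can be written down directly. This is a sketch: the 3x3 sharpening kernel is one common choice (with -1 around the center), and `gaussian_kernel` with its `size` and `sigma` parameters is an illustrative helper of my own, not code from the original program.

```python
import math

# A common 3x3 sharpening kernel: high center, negative neighbors.
# The entries sum to 1, so flat areas keep their brightness while
# differences between neighboring pixels are amplified.
SHARPEN = [
    [0, -1, 0],
    [-1, 5, -1],
    [0, -1, 0],
]


def gaussian_kernel(size, sigma):
    """Build a size x size Gaussian blur kernel normalized to sum to 1.

    A larger sigma makes the values fall off more slowly, widening the blur.
    """
    c = (size - 1) / 2.0
    k = [[math.exp(-((x - c) ** 2 + (y - c) ** 2) / (2.0 * sigma ** 2))
          for x in range(size)] for y in range(size)]
    total = sum(sum(row) for row in k)
    return [[v / total for v in row] for row in k]
```

Because both kernels sum to 1, a region of uniform color passes through unchanged; only edges (sharpening) or fine detail (blurring) are affected.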

The type of convolution matrix I'm using is called a Gabor filter. It is primarily used for feature detection, because it responds only to features of a certain size and a certain orientation. The values in a Gabor matrix are a sine wave multiplied by a Gaussian function, also known as a bell curve.
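For reference, here is one common way to write a Gabor filter with a circular Gaussian envelope. The symbols are my notation, not the article's: $\sigma$ is the width of the bell curve, $\lambda$ the wavelength of the sine wave, $\theta$ the orientation and $\psi$ the phase. Conventions vary; in this form $\theta$ is the direction the wave travels, so its stripes lie perpendicular to $\theta$.

$$
\begin{aligned}
x' &= x\cos\theta + y\sin\theta, \qquad y' = -x\sin\theta + y\cos\theta,\\
g(x, y) &= \exp\!\left(-\frac{x'^2 + y'^2}{2\sigma^2}\right)\cos\!\left(\frac{2\pi x'}{\lambda} + \psi\right).
\end{aligned}
$$

Setting $\psi = 0$ gives the cosine part and $\psi = -\pi/2$ gives the sine part, since $\cos(a - \pi/2) = \sin a$.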

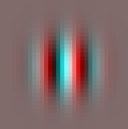

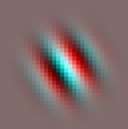

(Gabor filters at 0, 33.75, and 90 degree orientations)

These Gabor filters are actually made up of a sine and a cosine wave (shown in red and cyan). The phases of these two waveforms are offset by 90 degrees, which makes the pair orthogonal. The theory behind this is a little complex, but it is closely related to the reason the X and Y axes are orthogonal on a 2D grid of pixels.
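The 90-degree phase offset can be checked numerically. The sketch below builds the cosine (even) and sine (odd) halves of a Gabor kernel under one Gaussian envelope and shows that their element-wise dot product is essentially zero, which is what 'orthogonal' means here. `gabor_pair`, its parameters (`size`, `wavelength`, `theta` in radians, `sigma`) and the circular envelope are my assumptions; `theta` is the direction the wave travels.

```python
import math


def gabor_pair(size, wavelength, theta, sigma):
    """Return (even, odd) kernels: cosine and sine waves under one Gaussian."""
    c = (size - 1) / 2.0
    even = [[0.0] * size for _ in range(size)]
    odd = [[0.0] * size for _ in range(size)]
    for j in range(size):
        for i in range(size):
            x, y = i - c, j - c
            # Rotate coordinates so the wave travels along direction theta.
            xr = x * math.cos(theta) + y * math.sin(theta)
            yr = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(xr * xr + yr * yr) / (2.0 * sigma * sigma))
            phase = 2.0 * math.pi * xr / wavelength
            even[j][i] = envelope * math.cos(phase)
            odd[j][i] = envelope * math.sin(phase)
    return even, odd


def dot(a, b):
    """Sum of element-wise products of two equally sized kernels."""
    return sum(x * y for ra, rb in zip(a, b) for x, y in zip(ra, rb))
```

The cosine half is symmetric about the kernel center and the sine half is antisymmetric, so their products cancel in pairs and `dot(even, odd)` comes out at (numerically) zero for any orientation.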

The sine and cosine parts of the Gabor filter are rotated so that they detect features parallel to their orientation. This is where the filter gets its orientation-detecting characteristic. It is commonly believed that our own brains use this filtering method, or a very similar one.

Directionality

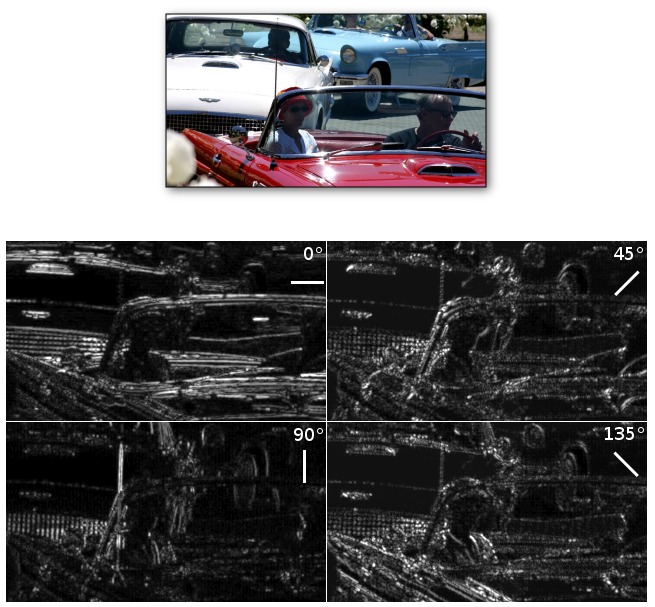

The first step the program takes in finding the directionality of an image is to convolve the image with the Gabor filter at 16 different orientations between 0 and 180 degrees (11.25 degrees apart). This produces 16 separate images, one for each orientation of the filter. The pixels in each of these images are grayscale values representing how strongly each area responded to the filter. Areas with a strong response are bright, indicating the strong presence of an edge oriented very close to the filter's orientation.
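Here is a sketch of the 16-orientation step. Orientations are spaced 180 / 16 = 11.25 degrees apart, and each pixel's value is the magnitude of the cosine and sine responses combined. That combination, the default parameter values and the function names are my assumptions; the article does not say exactly how the two halves are merged. It is pure Python and slow, meant only to show the structure.

```python
import math


def gabor_pair(size, wavelength, theta, sigma):
    """Even (cosine) and odd (sine) Gabor kernels sharing one Gaussian envelope."""
    c = (size - 1) / 2.0
    even, odd = [], []
    for j in range(size):
        even_row, odd_row = [], []
        for i in range(size):
            x, y = i - c, j - c
            # Rotate so the wave travels along direction theta.
            xr = x * math.cos(theta) + y * math.sin(theta)
            yr = -x * math.sin(theta) + y * math.cos(theta)
            env = math.exp(-(xr * xr + yr * yr) / (2.0 * sigma * sigma))
            phase = 2.0 * math.pi * xr / wavelength
            even_row.append(env * math.cos(phase))
            odd_row.append(env * math.sin(phase))
        even.append(even_row)
        odd.append(odd_row)
    return even, odd


def orientation_responses(image, size=11, wavelength=8.0, sigma=2.5, n=16):
    """Return n response maps, one per orientation k * 180 / n degrees.

    `size` should be odd. Each value is hypot(even, odd), a single
    non-negative strength. Borders are clamped. Flipping the kernels
    (true convolution) would only negate the odd response, which leaves
    the magnitude unchanged, so plain correlation is used here.
    """
    h, w = len(image), len(image[0])
    half = size // 2
    maps = []
    for k in range(n):
        even, odd = gabor_pair(size, wavelength, math.pi * k / n, sigma)
        out = [[0.0] * w for _ in range(h)]
        for y in range(h):
            for x in range(w):
                se = so = 0.0
                for j in range(size):
                    sy = min(max(y + j - half, 0), h - 1)
                    for i in range(size):
                        sx = min(max(x + i - half, 0), w - 1)
                        se += even[j][i] * image[sy][sx]
                        so += odd[j][i] * image[sy][sx]
                out[y][x] = math.hypot(se, so)
        maps.append(out)
    return maps
```

On a vertical step edge, the map at index 0 (wave travelling horizontally, across the edge) responds far more strongly along the edge than the map at index 8 (90 degrees).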

Below are four of these images, for four orientations of the Gabor filter (0, 45, 90, and 135 degrees):

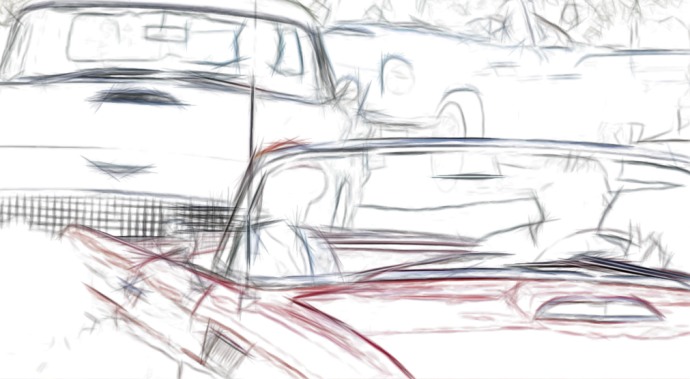

Now, for every pixel in the original image, there are sixteen filtered pixels, each representing how strongly the original pixel's area is oriented in one direction. By selecting the brightest of the sixteen, you can find which direction that pixel, or area, of the original image is oriented. If the orientation is particularly strong (a very bright pixel), a brush stroke can be drawn over that pixel along that direction. The length of the stroke may be tied to the strength of the orientation, it may be fixed, or it may be a combination of both.
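Picking the brightest of the sixteen values per pixel is an argmax over orientations. The sketch below turns response maps into a list of strokes. `pick_strokes` and its `threshold`, `base_len`, `gain` and `max_len` parameters are hypothetical; `gain = 0` gives fixed-length strokes, while a nonzero `gain` ties length to strength. It also assumes each filter's angle names the direction its wave travels, so the stroke is turned 90 degrees to run along the edge.

```python
import math


def pick_strokes(maps, threshold, base_len=4.0, gain=0.0, max_len=30.0):
    """Turn n orientation response maps into stroke descriptions.

    maps[k][y][x] is the response of the filter at k * 180 / n degrees.
    Returns a list of (x, y, angle_radians, length) tuples.
    """
    n = len(maps)
    h, w = len(maps[0]), len(maps[0][0])
    strokes = []
    for y in range(h):
        for x in range(w):
            # The brightest of the n filtered pixels gives the dominant orientation.
            k = max(range(n), key=lambda i: maps[i][y][x])
            strength = maps[k][y][x]
            if strength < threshold:
                continue  # orientation too weak: no stroke here
            # Assumption: filter angle = direction the wave travels, so the
            # edge (and the stroke) runs perpendicular to it.
            angle = math.pi * k / n + math.pi / 2
            # gain = 0 gives fixed-length strokes; gain > 0 ties length to strength.
            length = min(max_len, base_len + gain * strength)
            strokes.append((x, y, angle, length))
    return strokes
```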

The result, when using the source image's color for each stroke, resembles a quick colored-pencil sketch.

Variations

An oil pastel effect can be created simply by shrinking the filter size, to catch smaller details, and using a 50% gray background, so that dark and light strokes show up equally well.
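Drawing is the last step. In this sketch the canvas is a list of rows of RGB values filled with one background color (white for the pencil look, 50% gray for the oil pastel look), and each stroke is a 1-pixel line. The names `new_canvas` and `draw_stroke` are my own, and for brevity the line is not anti-aliased: sample points are simply rounded to the nearest pixel.

```python
import math

PENCIL_BACKGROUND = (255, 255, 255)
PASTEL_BACKGROUND = (128, 128, 128)  # 50% gray: dark and light strokes both show


def new_canvas(width, height, background):
    """Canvas as rows of [r, g, b] lists, all set to the background color."""
    return [[list(background) for _ in range(width)] for _ in range(height)]


def draw_stroke(canvas, x, y, angle, length, color):
    """Draw a 1-pixel line of `length` centered on (x, y) along `angle` (radians).

    Points are sampled every half pixel and rounded to the nearest pixel,
    so the line is not anti-aliased.
    """
    h, w = len(canvas), len(canvas[0])
    dx, dy = math.cos(angle), math.sin(angle)
    steps = max(1, int(length * 2))
    for s in range(steps + 1):
        t = -length / 2 + length * s / steps
        px, py = round(x + t * dx), round(y + t * dy)
        if 0 <= px < w and 0 <= py < h:
            canvas[py][px] = list(color)
```

For the colored look, pass the source image's pixel at (x, y) as `color` for each stroke.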

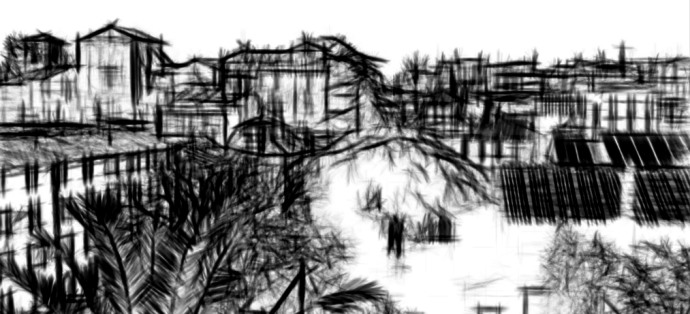

With a similar technique, painterly-style effects can also be rendered. Painters typically work this way, starting with large, blobby details and gradually working finer and finer. By varying the size of the filter, you can catch larger or smaller details. If you catch a series of different sizes of details, you can composite them into an image which, like this one, appears to be a painting. This particular painting combines sketches from several different scales: the image was convolved first with a 96x96 filter, then a 48x48, then 24x24, then 12x12. The brush strokes used here are all merely 1-pixel anti-aliased lines.
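The coarse-to-fine compositing can be outlined as a loop over filter sizes, largest first, so finer strokes land on top of coarser ones. Only the 96, 48, 24, 12 schedule comes from the text; `filter_sizes` and `paint_multiscale` are hypothetical, and `sketch_layer` is a placeholder callback standing in for one full sketch pass (filter, pick strokes, draw).

```python
def filter_sizes(largest=96, smallest=12):
    """Filter sizes from coarse to fine, halving each time."""
    sizes = []
    s = largest
    while s >= smallest:
        sizes.append(s)
        s //= 2
    return sizes


def paint_multiscale(canvas, image, sketch_layer, sizes):
    """Composite sketch passes from coarse to fine onto one canvas.

    sketch_layer(canvas, image, size) is a placeholder for one sketch pass;
    later (finer) passes draw over earlier (coarser) ones.
    """
    for size in sizes:
        sketch_layer(canvas, image, size)
    return canvas
```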

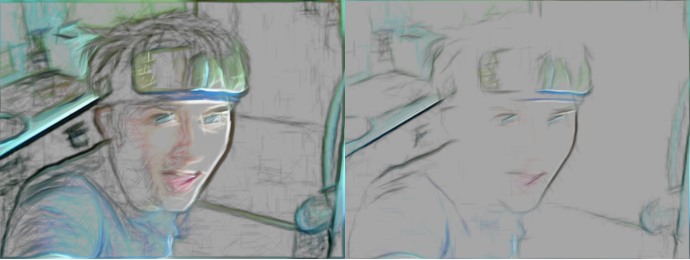

A final, faster variation of the previous method is to use a Gaussian-blurred version of the original image as the background for the pastel sketch. This has several drawbacks, however. The most noticeable are the halos visible around object boundaries.
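A Gaussian blur for the background is cheap because it is separable: blurring the rows and then the columns with a 1-D kernel gives the same result (up to rounding) as a full 2-D Gaussian kernel. Below is a single-channel sketch, to be applied to R, G and B separately; `gaussian_weights`, `blur_channel` and the 3-sigma kernel radius are my assumptions. The blur spreads each object's color across its boundary, which is likely where the halos come from.

```python
import math


def gaussian_weights(sigma):
    """1-D Gaussian weights covering +/- 3 sigma, normalized to sum to 1."""
    r = max(1, int(math.ceil(3 * sigma)))
    w = [math.exp(-(i * i) / (2.0 * sigma * sigma)) for i in range(-r, r + 1)]
    total = sum(w)
    return [v / total for v in w], r


def blur_channel(channel, sigma):
    """Separable Gaussian blur of one channel (rows of floats), borders clamped."""
    weights, r = gaussian_weights(sigma)
    h, w = len(channel), len(channel[0])
    # Horizontal pass.
    tmp = [[sum(weights[k + r] * row[min(max(x + k, 0), w - 1)]
                for k in range(-r, r + 1)) for x in range(w)] for row in channel]
    # Vertical pass over the horizontally blurred rows.
    return [[sum(weights[k + r] * tmp[min(max(y + k, 0), h - 1)][x]
                 for k in range(-r, r + 1)) for x in range(w)] for y in range(h)]
```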

Gallery

President Reagan is happy!

A photograph a friend took in Rome, charcoal style.

A rock bridge done in pastel mode with a fixed line length (30px)

My friend Derrick wearing a Naruto headband. Yes, you may feel sorry for him. Two different filter sizes used here.

This one was done with the gaussian blurred background method. The tree bark is interesting.

Going for a rough charcoal look here.
